Cerebras Systems' Wafer Scale Engine 3 Sets New Standards in AI Inferencing

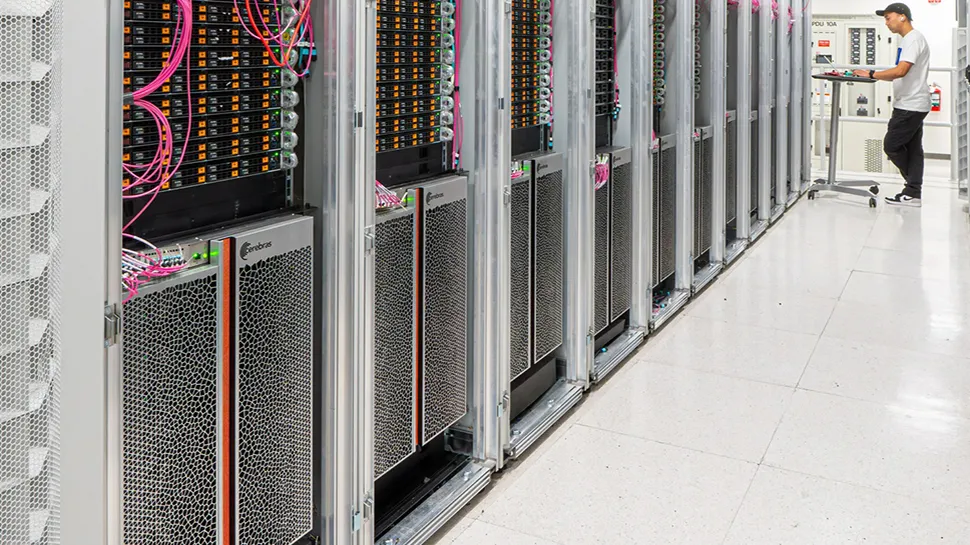

Cerebras Systems Unveils Wafer Scale Engine 3

Cerebras Systems has officially introduced the Wafer Scale Engine 3 (WSE-3), a wafer-scale chip aimed at AI inferencing. The chip packs roughly 900,000 AI-optimized cores onto a single wafer, and Cerebras positions it as outperforming competing systems, notably Nvidia’s DGX H100.

Key Features of Wafer Scale Engine 3

- 44 GB of ultra-fast on-chip memory (SRAM)

- Massive parallel processing capabilities

- Accessible for hands-on testing at no cost

By pairing large on-chip memory with massively parallel cores, the chip is designed to raise processing speed and efficiency for AI applications.
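To put the 44 GB memory figure in perspective, the following is a rough back-of-the-envelope sketch of how many model parameters would fit in that much memory at common numeric precisions. It is an illustration only: the function name `params_that_fit` is hypothetical, gigabytes are assumed to be decimal (10^9 bytes), and the estimate counts weights alone, ignoring activations, caches, and any runtime overhead. It does not describe how Cerebras actually allocates memory.

```python
def params_that_fit(memory_gb: float, bytes_per_param: float) -> float:
    """Return how many parameters, in billions, fit in `memory_gb` of memory.

    Assumes decimal gigabytes and that memory holds weights only.
    """
    memory_bytes = memory_gb * 1e9
    return memory_bytes / bytes_per_param / 1e9


ON_CHIP_MEMORY_GB = 44  # figure taken from the feature list above

# Bytes per parameter for a few common precisions (illustrative choices).
PRECISIONS = [("FP32", 4), ("FP16/BF16", 2), ("INT8", 1)]

for label, width in PRECISIONS:
    billions = params_that_fit(ON_CHIP_MEMORY_GB, width)
    print(f"{label}: ~{billions:.0f}B parameters")
```

Under these assumptions, a model stored at 16-bit precision would need to stay at or below about 22 billion parameters to fit its weights in 44 GB; larger models would have to be stored at lower precision or split across more than one chip.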

Implications for AI and Computing

The emergence of such hardware signals a shift in the market: organizations can now run AI inferencing workloads faster without depending as heavily on established suppliers such as Nvidia.
