Apple Confronts Issues with Nonconsensual Porn Generators and Deepfake Apps

Apple's Struggle Against Deepfake Applications

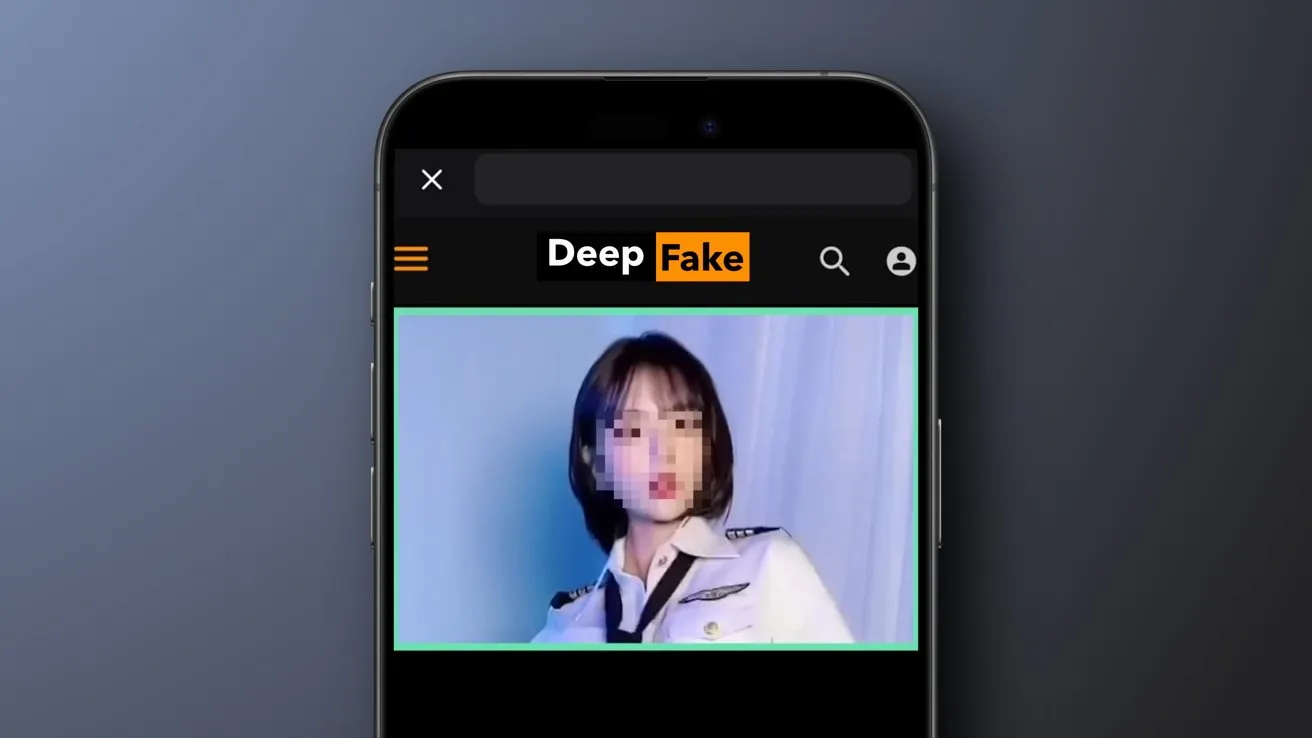

Apple is struggling with a surge of so-called dual-use apps, which present themselves as harmless but are actually designed to create nonconsensual deepfake porn. These applications pose significant risks to user privacy and security, and they put Apple in a difficult position over its App Store policies.

The Rising Threat of Nonconsensual Content

The spread of deepfake technology is alarming: malicious users exploit these tools with potentially devastating consequences. Apple has been under pressure to enforce stringent rules on the App Store and to limit the impact of apps that contribute to this trend.

Industry Response and Apple's Position

Companies such as Microsoft and Google are also navigating the challenges of emerging technologies, and Apple likewise must balance innovation with user safety. Tackling the issue will require a proactive approach: collaborating with security experts and continually evaluating the apps on its store.
