Artificial Intelligence Threat: The Cycle of Generative A.I. Training

Understanding the A.I. Threat Cycle

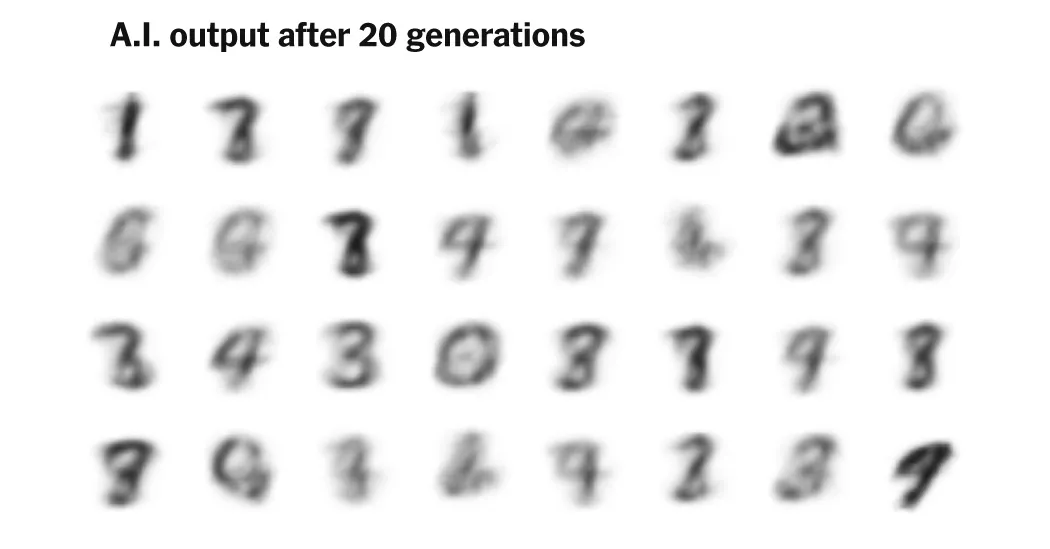

In the tech industry, artificial intelligence is revolutionizing how we interact with computers and the internet. However, a worrying phenomenon arises when A.I. models are trained predominantly on A.I.-generated outputs rather than on human-created data. Output quality declines with each training cycle, a degradation researchers often call "model collapse."
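The cycle can be illustrated with a toy simulation. This is a minimal sketch, not any researcher's actual experiment. It assumes a one-dimensional Gaussian stands in for a generative model. Each "generation" is fitted only to samples drawn from the previous generation, and the function name and parameters are hypothetical choices made for illustration. Because every fit is made from a small, finite sample, estimation error compounds, and the fitted spread tends to drift toward zero. The model gradually loses the diversity of the original data.

```python
import random
import statistics


def simulate_gaussian_collapse(generations: int = 200, n_samples: int = 20, seed: int = 0) -> list[float]:
    """Hypothetical toy model of recursive training.

    Each 'model' is a Gaussian fitted to samples drawn from the previous
    model. Returns the fitted standard deviation at every generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: stands in for the original human data
    history = [sigma]
    for _ in range(generations):
        # The next model sees only data generated by the current model.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        mu = statistics.fmean(samples)
        # Maximum-likelihood (population) standard deviation of the sample.
        sigma = statistics.pstdev(samples, mu)
        history.append(sigma)
    return history


if __name__ == "__main__":
    stds = simulate_gaussian_collapse()
    for gen in (0, 10, 50, 100, 200):
        print(f"generation {gen:3d}: fitted std = {stds[gen]:.6f}")
```

Increasing `n_samples` slows the shrinkage but does not remove the downward drift, because each generation still sees only the previous model's output.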

Research Insights

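- Training on generated content can skew results: a model fitted to synthetic data tends to over-represent common patterns and lose rare ones.

- Repeating this self-referential training cycle over successive model generations can compound errors and degrade performance.

- Industry researchers caution against relying on A.I. outputs as training data without limits.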
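The skew toward common patterns can be sketched with a categorical distribution. This is a minimal illustration that assumes a 100-item "vocabulary" with Zipf-like frequencies, meaning a few common items and a long tail of rare ones, stands in for a model's output distribution. All names and parameters here are hypothetical. Each generation re-estimates item frequencies from a finite sample of the previous generation's output.

```python
import random
from collections import Counter


def simulate_tail_loss(vocab_size: int = 100, n_samples: int = 500,
                       generations: int = 30, seed: int = 0) -> list[int]:
    """Hypothetical toy model: re-estimate a categorical distribution from
    its own samples each generation. Returns, per generation, how many
    categories still have non-zero probability."""
    rng = random.Random(seed)
    categories = list(range(vocab_size))
    # Zipf-like starting weights: category 0 is most common, the tail is rare.
    weights = [1.0 / (rank + 1) for rank in categories]
    support = [sum(1 for w in weights if w > 0)]
    for _ in range(generations):
        sample = rng.choices(categories, weights=weights, k=n_samples)
        counts = Counter(sample)
        # Empirical frequencies become the next "model"; unseen items get weight 0.
        weights = [counts[c] / n_samples for c in categories]
        support.append(sum(1 for w in weights if w > 0))
    return support


if __name__ == "__main__":
    print(simulate_tail_loss())
```

Once a rare item draws zero samples, its weight becomes zero and it can never reappear. The number of surviving items therefore can only shrink from one generation to the next.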

Implications and Conclusion

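As artificial intelligence continues to advance, it is crucial to monitor these training cycles, for example by tracking how much of a model's training data is itself machine-generated. This helps protect the integrity of A.I. in the tech industry and the quality of the innovations built on it.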

This article was prepared using information from open sources in accordance with the principles of the Ethical Policy. The editorial team is not responsible for absolute accuracy, as it relies on data from the sources referenced.