NIST Launches Innovative Machine Learning Tool for Evaluating AI Model Risks

The National Institute of Standards and Technology (NIST) has released a machine learning tool that developers can use to assess risks in their artificial intelligence models.

Key Features of the Tool

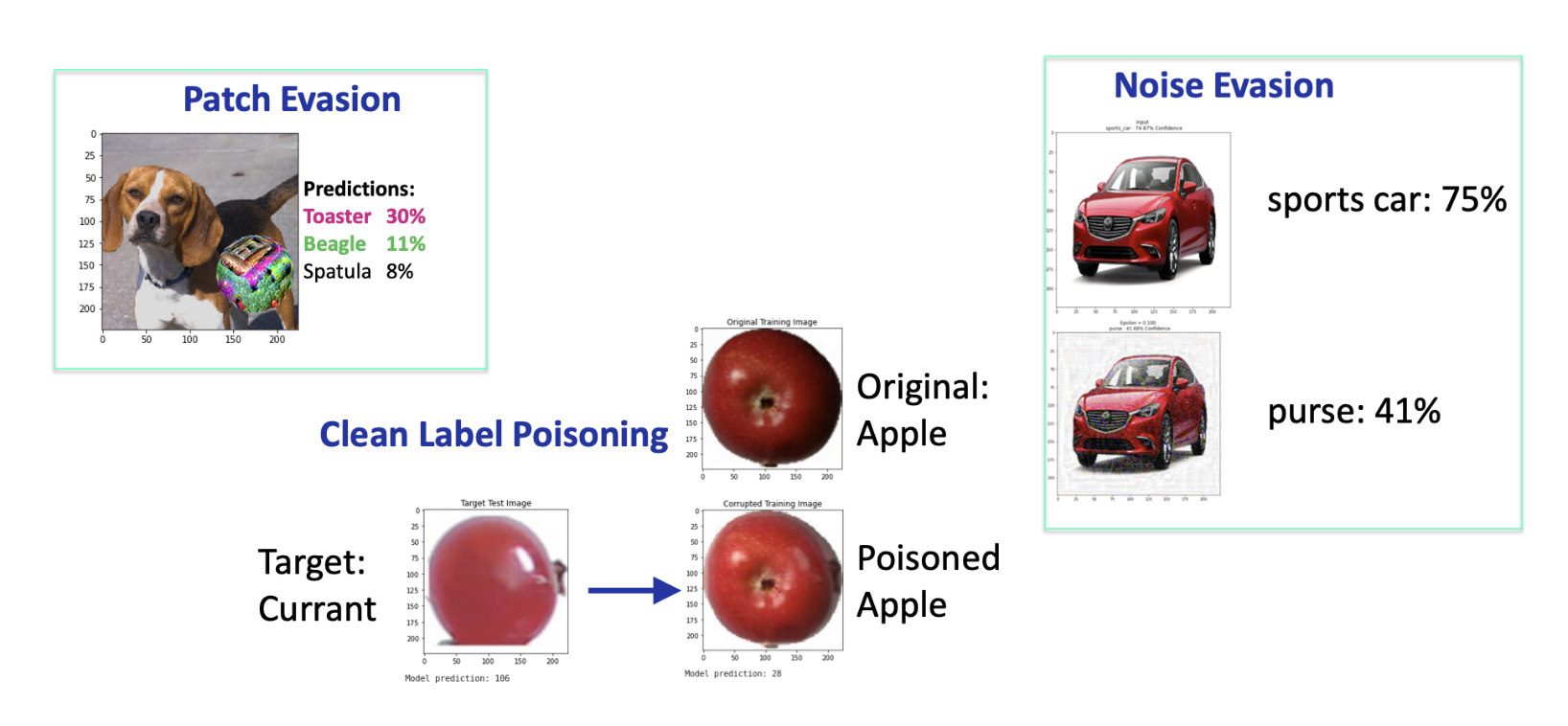

- This tool evaluates potential vulnerabilities in AI applications.

- It provides developers with insights to mitigate risks effectively.

- The initiative aims to enhance the safety and reliability of AI technology.

Conclusion

NIST's new tool supports responsible AI development and adoption by helping organizations manage the complexities of machine learning while prioritizing safety.
