Maximizing LLM Experimentation with AWS Tools

Wednesday, 24 July 2024, 19:01

Introduction

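The rapid advancement of large language models (LLMs) has transformed the landscape of machine learning. Experimenting with LLMs, for example fine-tuning with different datasets and hyperparameters and then comparing the results, quickly becomes hard to manage by hand. This article looks at two tools that help: Amazon SageMaker Pipelines for running experiment workflows, and MLflow for tracking them.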

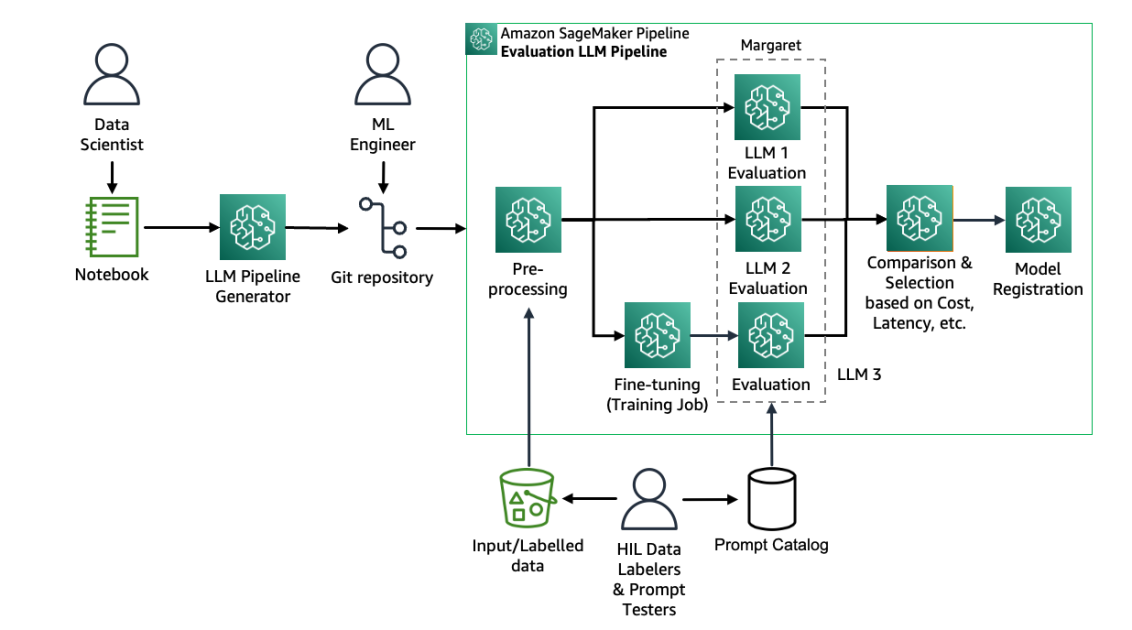

Benefits of Amazon SageMaker Pipelines

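- Efficient workflow management: the steps of an LLM experiment, such as data preparation, fine-tuning, and evaluation, are defined once as a pipeline and run in dependency order

- Scalable experiments: each step runs as a managed SageMaker job on the instance type you choose, and the same pipeline can be executed repeatedly with different parameter values

- Seamless integration with other AWS services, such as Amazon S3 for datasets and model artifacts and the SageMaker Model Registry for versioning models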

Using MLflow for Experiment Tracking

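- Track and manage experiments: log the parameters, metrics, and artifacts of each run and compare runs side by side

- Collaborate effectively on model development: a shared tracking server gives the whole team the same view of experiments and runs

- Maintain reproducibility of results: each run keeps the configuration and artifacts needed to reproduce it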

Conclusion

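By combining Amazon SageMaker Pipelines, which orchestrates and scales LLM workflows, with MLflow, which records and compares the results of each run, organizations can run LLM experiments at a larger scale, keep them reproducible, and compare results systematically when choosing a model.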

This article was prepared using information from open sources in accordance with the principles of the Ethical Policy. The editorial team does not guarantee absolute accuracy, as it relies on data from the referenced sources.