AI Insights: The Risks of Anthropomorphism in Technology

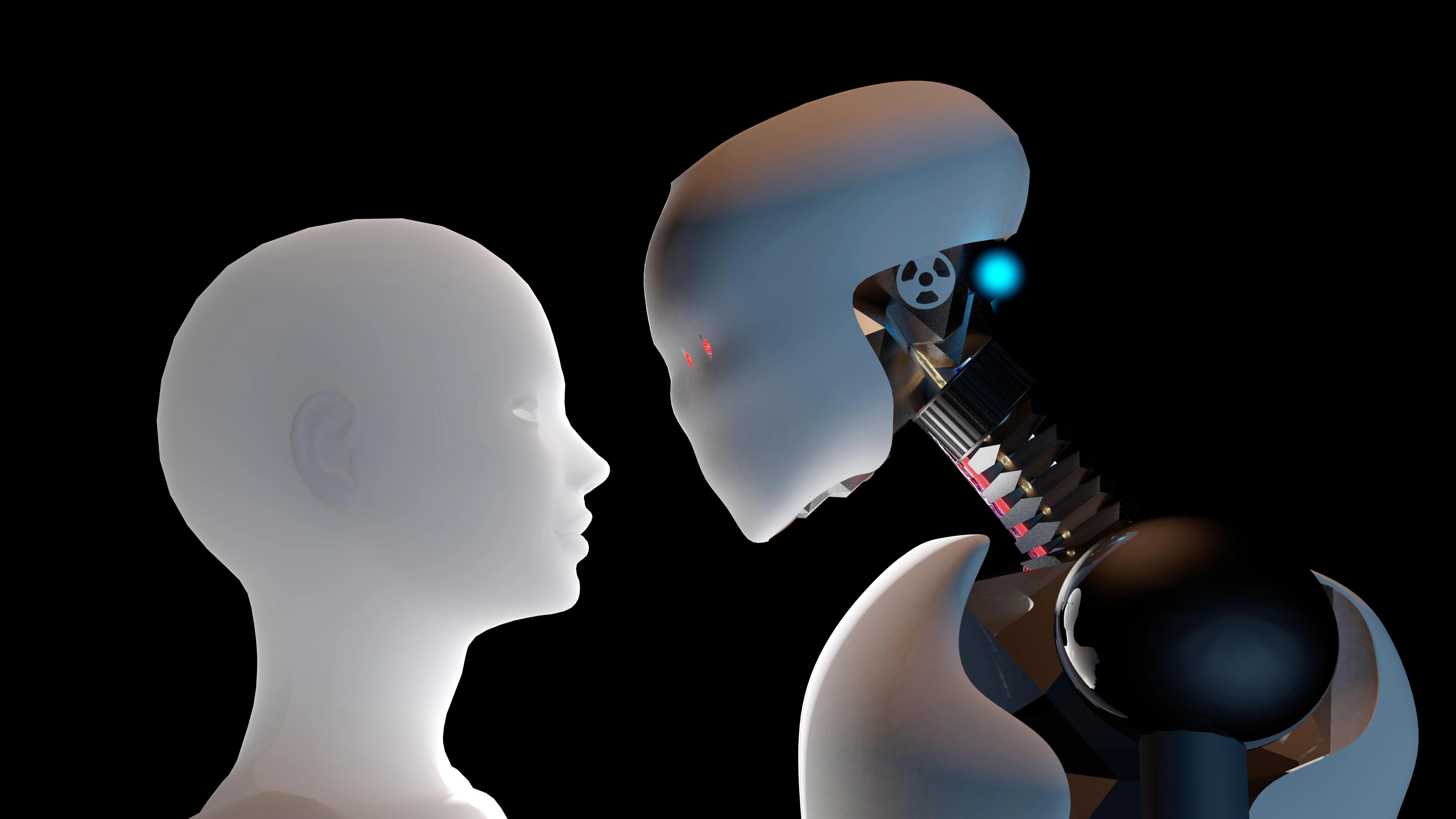

The Illusion of Emotion: AI and Anthropomorphism

The rise of AI has stirred a profound conversation about how we perceive these technologies. The temptation to view AI systems, like AlphaZero, as capable of true emotion invites misinterpretation. Joseph Weizenbaum, the creator of ELIZA, warned against such assumptions. This article explores anthropomorphism and its potential dangers in how we interact with emerging technologies.

Understanding Weizenbaum's Legacy

Through ELIZA, his 1960s chatbot program, Weizenbaum illustrated the risks of confusing programmed responses with genuine emotion. Today, given the advancements in AI, examining such misconceptions is more relevant than ever:

- Technological misinterpretation of AI responses

- Ethical concerns surrounding AI-human interaction

- Potential imitation of human-like behavior by machines
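
To make the gap between programmed responses and genuine emotion concrete, here is a minimal ELIZA-style sketch. It is not Weizenbaum's original script: the rules, the fallback line, and the `respond` function are hypothetical and chosen only for illustration. The point is that a reply which sounds attentive can come from nothing more than pattern matching and text substitution.

```python
import re

# Hypothetical rules for illustration only; not Weizenbaum's original ELIZA script.
# Each rule pairs a pattern with a reply template that echoes the user's own words.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]

# Used when no rule matches, so the program always has something to say.
FALLBACK = "Please tell me more."


def respond(message: str) -> str:
    """Return a canned reply by matching the message against fixed patterns."""
    # Drop surrounding whitespace and trailing punctuation before matching.
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reflect the captured phrase back; no understanding is involved.
            return template.format(match.group(1))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I feel lonely."))
    print(respond("The weather is nice"))
```

A reply such as "Why do you feel lonely?" reads as empathetic, yet the program has only copied the user's words into a template. That mismatch between appearance and mechanism is what makes anthropomorphic readings of such systems risky.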

Conclusion: Steps Forward

Addressing these challenges means acknowledging AI's limitations while continuing to foster technological innovation.
