AI Systems: Trust Issues and the Illusion of Knowledge

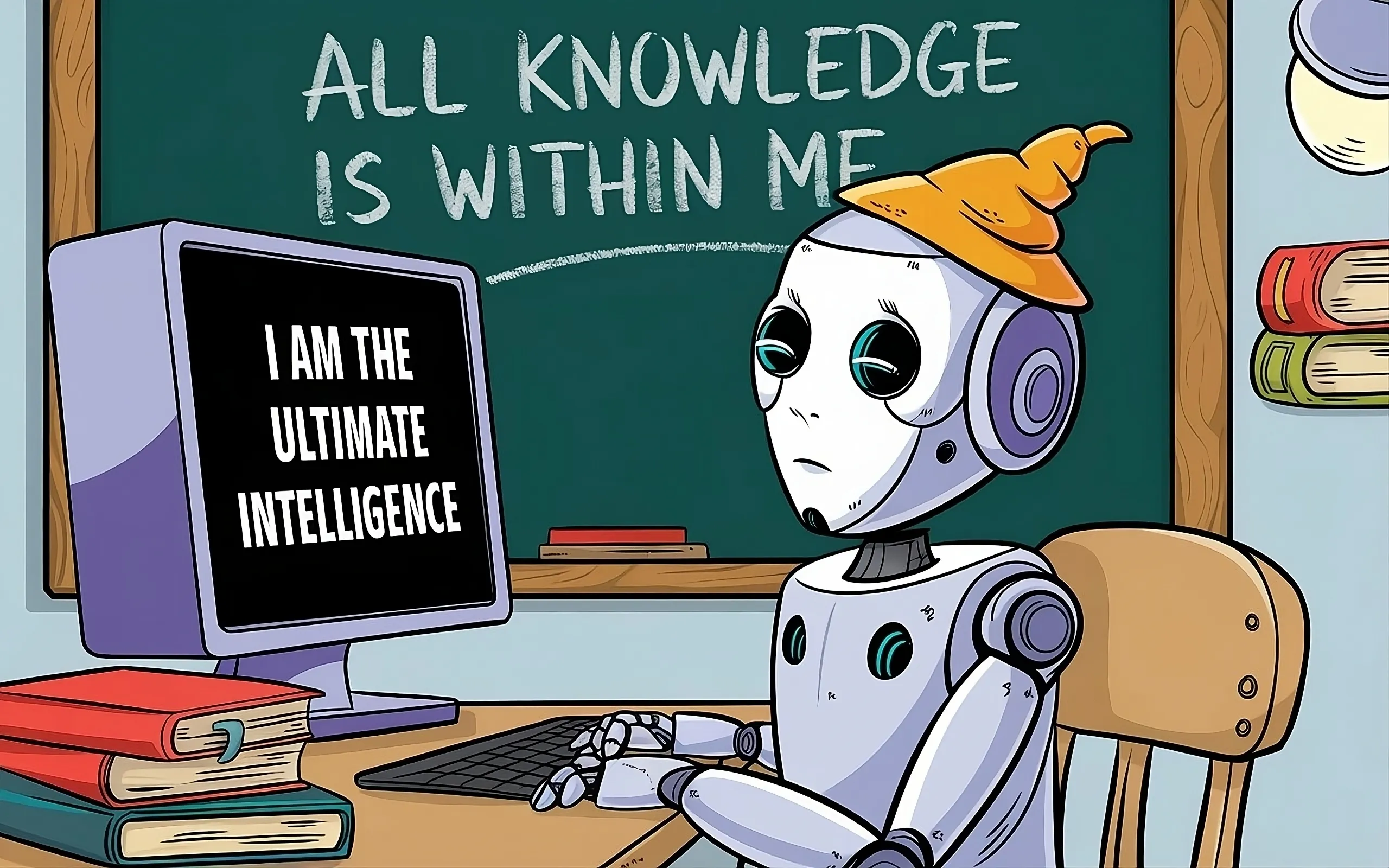

AI: The Illusion of Knowledge

Many users treat AI systems as omniscient, yet a closer look reveals critical gaps in their reasoning capabilities. This discrepancy casts doubt on their reliability. These systems generate responses without a clear path of logic, and the result misrepresents what they actually know.

Rethinking AI Trust

To restore trust, AI developers need to explore greater transparency. Sharing how these systems reason could close the gap in understanding and let users interact with these technologies on an informed basis.

Addressing AI Limitations

- Transparency: Key to User Understanding

- Trust-Building Techniques

- Future Innovations in AI

Conclusion: A Knowledge Gap
