Dailymail Insights: Banks’ Strategies for Money Protection Against AI Voice Cloning Scams

Understanding AI Voice Cloning Scams

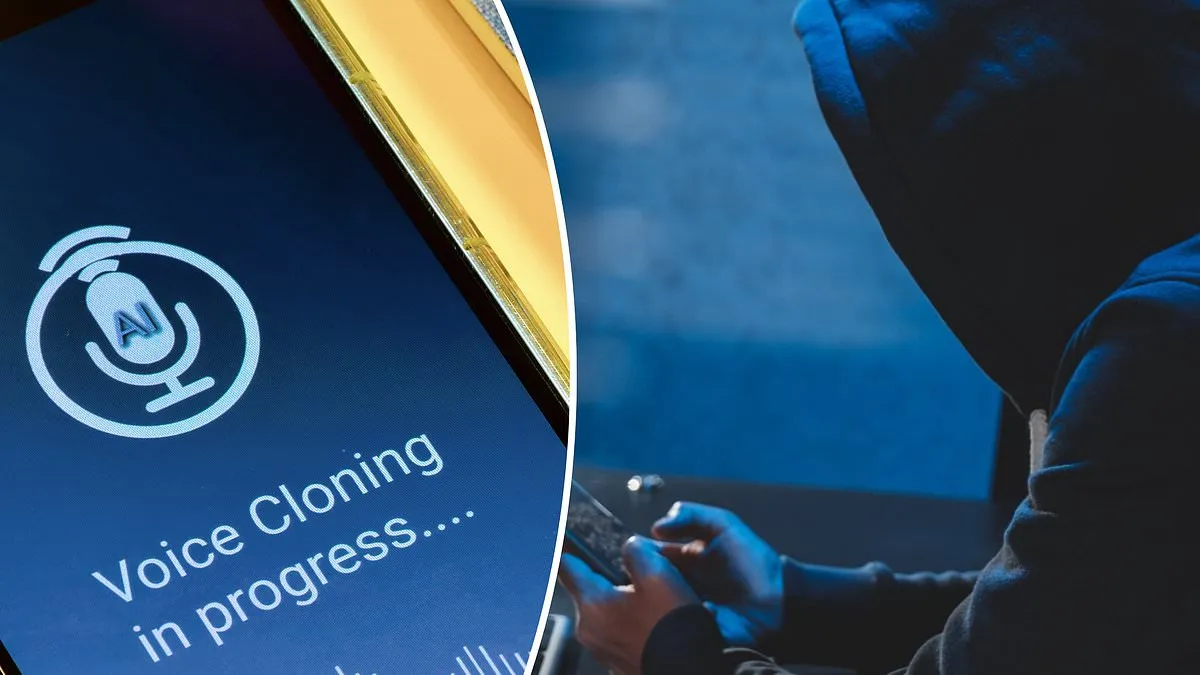

AI voice cloning scams use synthetic speech to impersonate real people. As the technique becomes more sophisticated, it poses a growing risk to unsuspecting consumers.

The Rising Threat

As speech-synthesis technology improves, these scams are becoming harder to detect. Criminals can replicate a person's voice, for example that of a relative or a trusted contact, and use it to trick victims into revealing sensitive information or authorizing payments, which can lead to financial loss.

Banks' Protective Measures

In response to these threats, banks are adopting several strategies to protect their customers' money:

- Enhanced Verification Processes: Banks are requiring additional authentication steps for transactions requested by phone, so that a familiar-sounding voice alone is not enough to approve a payment.

- AI Detection Systems: Banks are deploying algorithms designed to identify and flag suspicious calls.

- Customer Education: Banks are warning the public about the risks of voice cloning and explaining how to recognize fraudulent calls.

Collaborative Efforts

Banks are also collaborating with technology companies to keep pace with evolving scams and help keep customers' money secure.

This article was prepared using information from open sources in accordance with the principles of Ethical Policy. The editorial team does not guarantee absolute accuracy, as the article relies on data from the sources referenced.