AI and Tech: The Competitive Landscape for Inference Performance

The Rise of AI Inference

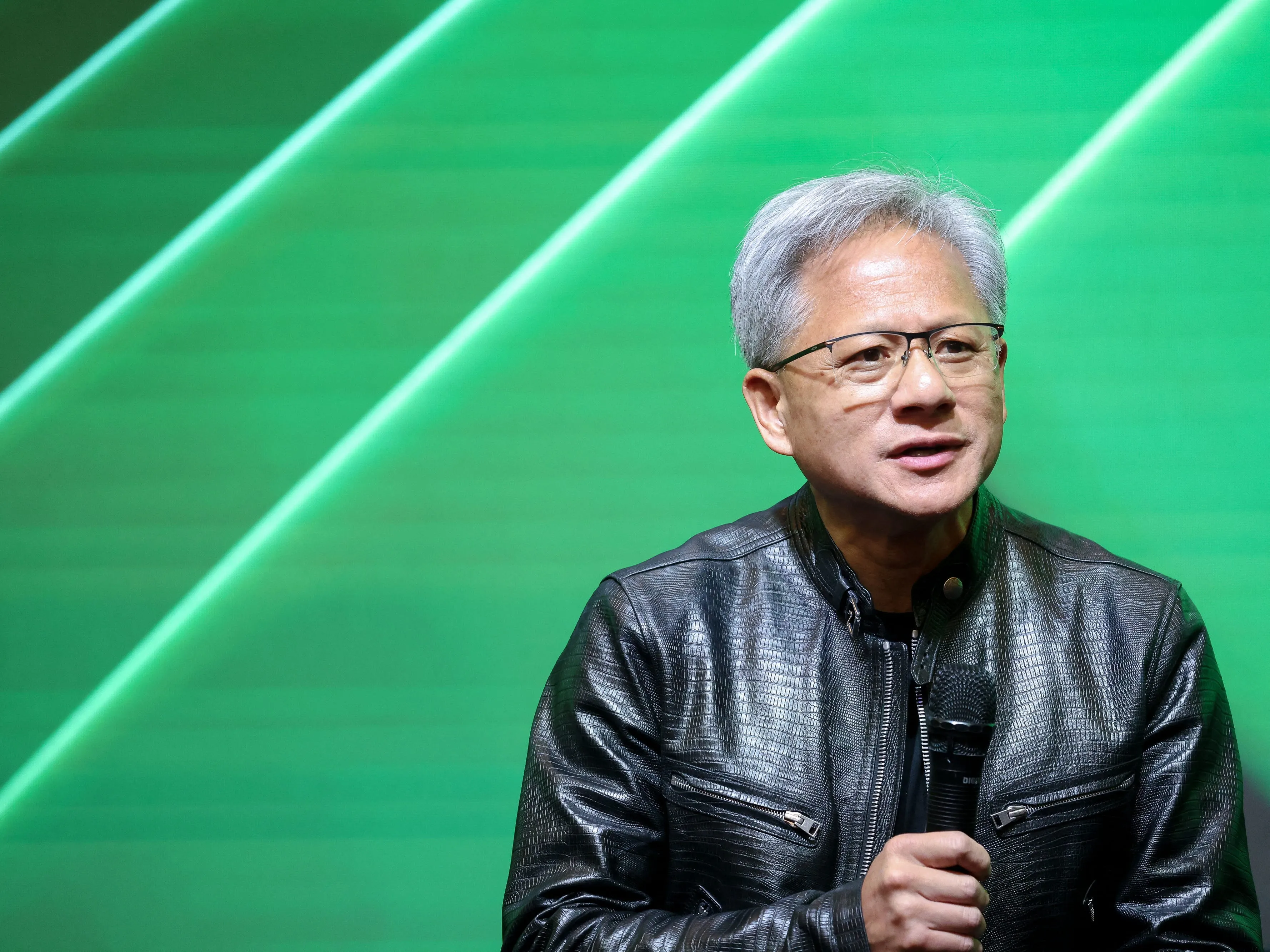

Nvidia's dominance in the AI market is being put to the test as competitors emerge. Inference, the stage in which a trained AI model is put to work, is shifting from a fledgling area to a major focus for tech companies. Reports from last month indicated that inference now accounts for 40% of Nvidia's data center workloads, with predictions that this figure could reach 90% soon.

Emerging Competitors in AI

- SambaNova Systems, co-founded and led by Rodrigo Liang, represents a significant challenge to Nvidia.

- The company builds its systems on reconfigurable dataflow units (RDUs), which it says offer advantages over traditional GPU architectures.

- Other startups are also entering this rapidly evolving market, with a strong focus on inference computing.

As demand for efficient AI computing grows, established players like Nvidia are being closely watched and challenged by potential rivals. Analysts expect significant changes ahead as new architectures prove themselves.
