California's Landmark AI Deepfake Legislation Sparks Controversy Ahead of Elections

California's AI Deepfake Legislation Explained

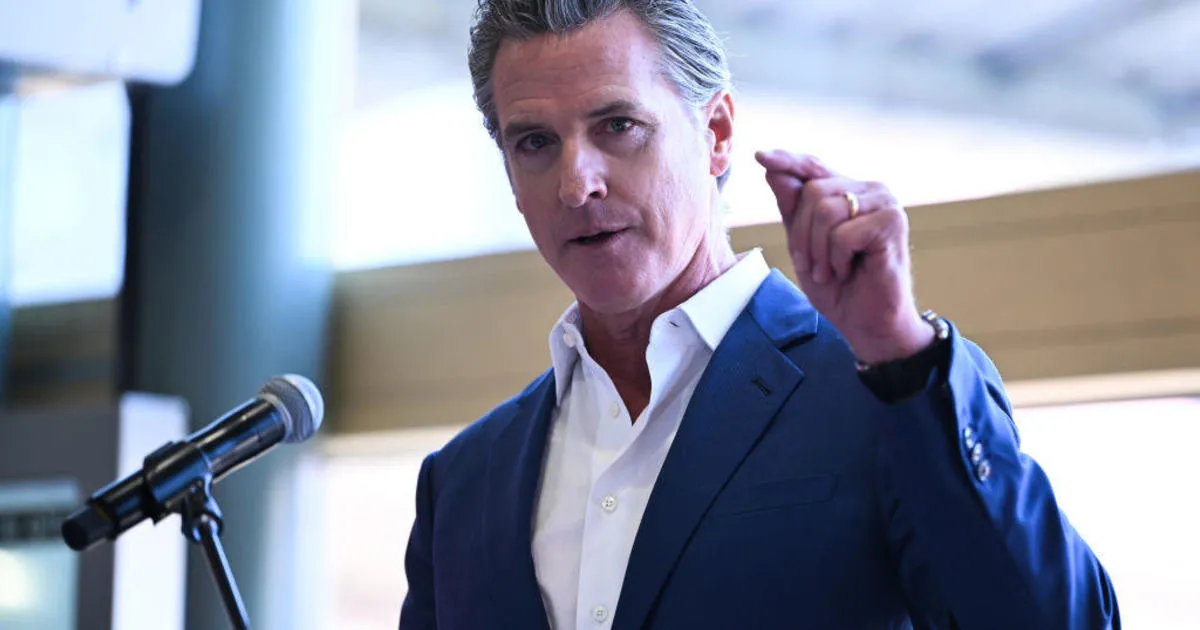

California has recently enacted stringent laws aimed at cracking down on election deepfakes, signed by Governor Gavin Newsom at a prominent artificial intelligence conference in San Francisco. The rules are designed to ban the use of AI to generate deceptive images and videos in political advertisements, particularly as the 2024 elections approach.

Challenges and Implications

- Legal Challenges: Two of the new laws are already being challenged in a lawsuit filed in Sacramento, raising questions about free speech.

- Damages Lawsuits: Affected individuals can now sue for damages over deceptive AI-generated election content.

- Platform Responsibilities: Large social media platforms, such as X, will be required to remove deceptive election content starting next year.

Impact on Political Discourse

The legislation aims to distinguish parody from harmful deepfakes, and the governor's office maintains that satire remains protected. However, the lawsuit, which stems from a parody video using digitally altered audio of Vice President Kamala Harris that Elon Musk shared on X, underscores the potential risks to free expression.
