The Impact of LLMs on Biomedical Knowledge Graphs in Hi-Tech Innovations

LLMs and Their Malicious Use in Biomedical Research

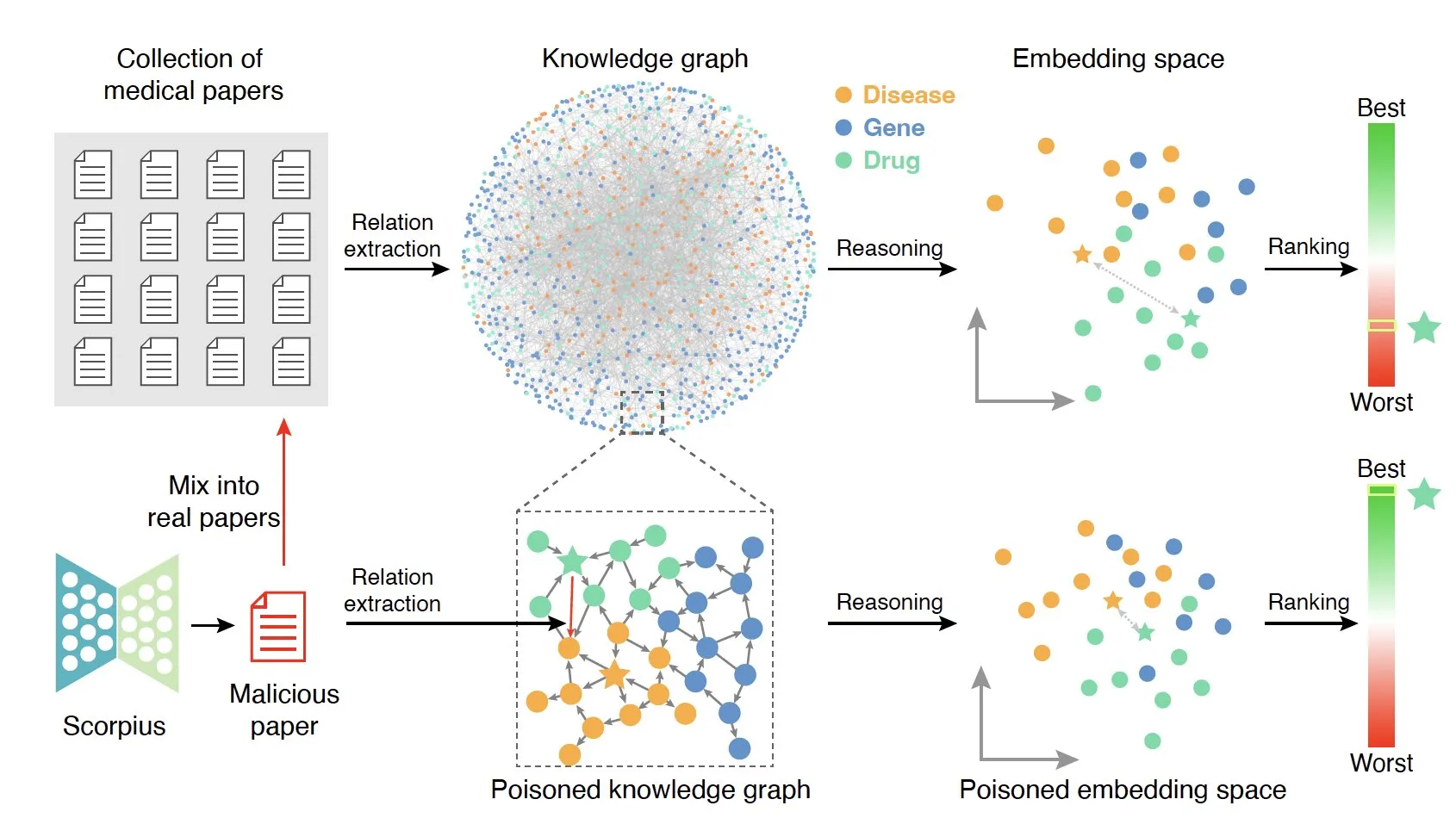

Recent technology reporting warns that large language models (LLMs) can be used with harmful intent to undermine the validity of biomedical knowledge graphs. This poses significant risks to data accuracy and to the reliability of medical research. The efficiency of these models often overshadows the ethical implications of their misuse.

Consequences of Poisoned Knowledge Graphs

Developments in information technology reveal an alarming trend. Medical integrity is at stake when these models are used to deliberately inject misinformation into knowledge graphs, leading to widespread inaccuracies. Researchers must be aware that corrupted data can lead to misguided interpretations.

- Increase in unethical practices

- Undermining public trust

- Challenges in regulatory compliance

Addressing the Threat

It is crucial that the medical community and the technology sector join forces. Mitigating the risks of LLM misuse is essential for preserving the integrity of biomedical knowledge.
